
PyTorch - Training a Net

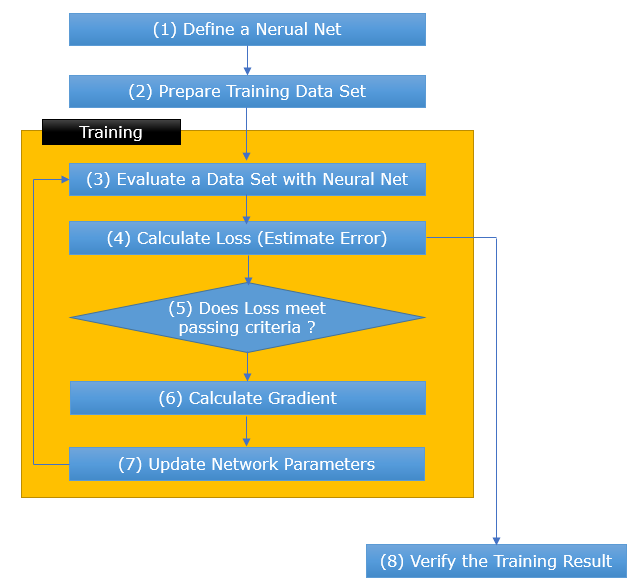

Whatever kind of neural network you are trying to implement, the overall procedure is similar, as illustrated in the diagram above. In this note I will implement a very simple neural network (probably the simplest possible one) and highlight its training procedure. For a generic description of each of these steps, refer to the note How to Build.

Following is the definition of the neural network (i.e., Step (1)). The network in this example finds the best coefficients a and b of the linear equation y = ax + b. If you are not familiar with the structure of a neural network class, refer to the note nn.Module.

```python
import torch
import torch.nn as nn

class LinearRegression_i1_o1(nn.Module):
    def __init__(self):
        super().__init__()
        # One input feature and one output feature: the layer holds a weight (a) and a bias (b)
        self.linear = nn.Linear(1, 1)

    def forward(self, x):
        o = self.linear(x)
        return o
```
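To see how this class maps onto y = ax + b, here is a small self-contained sketch. `nn.Linear(1, 1)` stores the slope a in its `weight` (shape [1, 1]) and the intercept b in its `bias` (shape [1]). The values a = 2 and b = 1 are set by hand purely for illustration; in the note they are learned during training.

```python
import torch
import torch.nn as nn

class LinearRegression_i1_o1(nn.Module):
    def __init__(self):
        super().__init__()
        self.linear = nn.Linear(1, 1)

    def forward(self, x):
        return self.linear(x)

net = LinearRegression_i1_o1()

# The model has exactly two trainable numbers: weight (a) and bias (b)
n_params = sum(p.numel() for p in net.parameters())
print(n_params)  # 2

# Set a = 2 and b = 1 by hand (illustrative values); no_grad keeps this out of autograd
with torch.no_grad():
    net.linear.weight.fill_(2.0)
    net.linear.bias.fill_(1.0)

x = torch.tensor([[1.0], [2.0], [3.0]])
out = net(x)  # computes a * x + b for each row
print(out.detach().squeeze(1).tolist())  # [3.0, 5.0, 7.0]
```

Each row of the input is one sample, which is why the input has shape [3, 1] rather than [3].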

Next, I prepare the data used to train the network. In this example there are only three pairs of x_data and y_data, shown below. This corresponds to Step (2).

```python
x_data = torch.tensor([[1.0], [2.0], [3.0]])
y_data = torch.tensor([[3.0], [5.0], [7.0]])
```

Now let's instantiate the neural network class. We will evaluate the data set with this instance (named `net`).

```python
net = LinearRegression_i1_o1()
```

Before we start training, we need to define the loss, which serves as the criterion for how well the network is trained. The lower the value the loss converges to, the better the network is trained. The loss is based on the error (the difference between the calculated output and the desired output); it is usually some function of that error. Neural networks use many different functions of the error, and choosing the loss function is a very important step in implementing a neural network. You would need some domain knowledge and trial and error to pick the best loss function for your network. Here the mean squared error loss is used; with `reduction='sum'`, the squared errors of all samples are summed instead of averaged.

NOTE: PyTorch supports various types of loss functions. You can find the list in the loss-function section of the `torch.nn` documentation.

```python
criterion = nn.MSELoss(reduction='sum')
```

There is one more step to do before you start training: determine how the weights in the network are updated at each training step. Basically this is based on the gradient descent method, but in practice the algorithm is modified in various ways to speed up the calculation or to converge to the solution more reliably. As with the loss function, you would need some domain knowledge and trial and error to pick the best optimizer for your network.

NOTE: PyTorch supports various types of optimizers. You can find the list in the `torch.optim` documentation.
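The loss and the update rule described above can be checked by hand. The minimal sketch below, assuming the same data and `nn.MSELoss(reduction='sum')`, compares the built-in loss with an explicitly summed squared error, then performs one `optim.SGD` step and compares it with the plain gradient-descent update p_new = p - lr * grad. A bare `nn.Linear(1, 1)` stands in for the note's class to keep the example short, and the seed is an arbitrary choice.

```python
import torch
import torch.nn as nn
import torch.optim as optim

torch.manual_seed(0)  # reproducible initial weights

x_data = torch.tensor([[1.0], [2.0], [3.0]])
y_data = torch.tensor([[3.0], [5.0], [7.0]])
model = nn.Linear(1, 1)  # same layer as inside LinearRegression_i1_o1
criterion = nn.MSELoss(reduction='sum')
optimizer = optim.SGD(model.parameters(), lr=0.01)

y = model(x_data)
loss = criterion(y, y_data)
# reduction='sum' adds up the squared errors instead of averaging them
manual_loss = ((y - y_data) ** 2).sum()
print(loss.item(), manual_loss.item())

optimizer.zero_grad()
loss.backward()

# Expected result of a plain gradient-descent step (SGD defaults: no momentum, no weight decay)
lr = 0.01
expected = [(p - lr * p.grad).detach().clone() for p in model.parameters()]

optimizer.step()
for p, e in zip(model.parameters(), expected):
    print(torch.allclose(p.detach(), e))  # True for both weight and bias
```

With its default settings, `optim.SGD` performs exactly this update; other optimizers such as Adam modify it.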
In this note, the weights are updated with `optim.SGD` using a learning rate of 0.01:

```python
import torch.optim as optim

optimizer = optim.SGD(net.parameters(), lr=0.01)
```

The following lines train the neural network. They simply follow the steps shown in the diagram at the beginning of this page.

```python
for epoch in range(100):
    y = net(x_data)              # Evaluate the given input data (Step (3))
    loss = criterion(y, y_data)  # Estimate the loss (Step (4))
    optimizer.zero_grad()        # Reset the gradient values to zero
    loss.backward()              # Calculate the gradient values (Step (6))
    optimizer.step()             # Update each weight based on the calculated gradients (Step (7))
    print('epoch =', epoch, ', loss =', loss.item())
```

NOTE: In PyTorch, it is recommended to reset the gradients with `optimizer.zero_grad()` before you calculate new gradient values. Gradients accumulate by default, so without this call the values stored in the previous iteration would be added to the new ones.
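The NOTE about `optimizer.zero_grad()` can be demonstrated directly. In this small sketch (the single parameter `w` and the toy loss are illustrative assumptions), calling `backward()` twice without zeroing adds the second gradient onto the first, while `optimizer.zero_grad()` clears it.

```python
import torch
import torch.optim as optim

w = torch.tensor([1.0], requires_grad=True)  # a single illustrative parameter
optimizer = optim.SGD([w], lr=0.01)

def compute_loss():
    # loss = (3w)^2 = 9w^2, so d(loss)/dw = 18w = 18 at w = 1
    return ((3.0 * w) ** 2).sum()

compute_loss().backward()
g1 = w.grad.item()

compute_loss().backward()  # no zero_grad: the new gradient is added to the old one
g2 = w.grad.item()

optimizer.zero_grad()  # clears w.grad (recent PyTorch versions set it to None)
compute_loss().backward()
g3 = w.grad.item()

print(g1, g2, g3)  # 18.0 36.0 18.0
```

Accumulation is sometimes useful on purpose (for example, summing gradients over several small batches), which is why PyTorch leaves the reset to the user.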

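Because the three data points lie exactly on y = 2x + 1, the trained network should end up with a weight close to 2 and a bias close to 1. With lr = 0.01 convergence is fairly slow, so after 100 epochs the parameters may not yet be very close to these values. The self-contained sketch below runs the same loop for 2000 epochs (an illustrative choice, not from the note), drops the per-epoch printing, and reads back the learned parameters.

```python
import torch
import torch.nn as nn
import torch.optim as optim

class LinearRegression_i1_o1(nn.Module):
    def __init__(self):
        super().__init__()
        self.linear = nn.Linear(1, 1)

    def forward(self, x):
        return self.linear(x)

torch.manual_seed(0)  # reproducible initial weights

x_data = torch.tensor([[1.0], [2.0], [3.0]])
y_data = torch.tensor([[3.0], [5.0], [7.0]])  # exactly y = 2x + 1

net = LinearRegression_i1_o1()
criterion = nn.MSELoss(reduction='sum')
optimizer = optim.SGD(net.parameters(), lr=0.01)

for epoch in range(2000):  # more epochs than the note's 100 (illustrative choice)
    y = net(x_data)
    loss = criterion(y, y_data)
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

a = net.linear.weight.item()
b = net.linear.bias.item()
print(f'a = {a:.4f}, b = {b:.4f}, final loss = {loss.item():.2e}')

# Use the trained network on a new input, x = 4 (should be close to 2*4 + 1 = 9)
with torch.no_grad():
    print(net(torch.tensor([[4.0]])).item())
```

Raising the learning rate would speed this up, but too large a value makes gradient descent diverge, which is one reason the optimizer settings need the trial and error mentioned earlier.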