Machine Learning

How to Build?

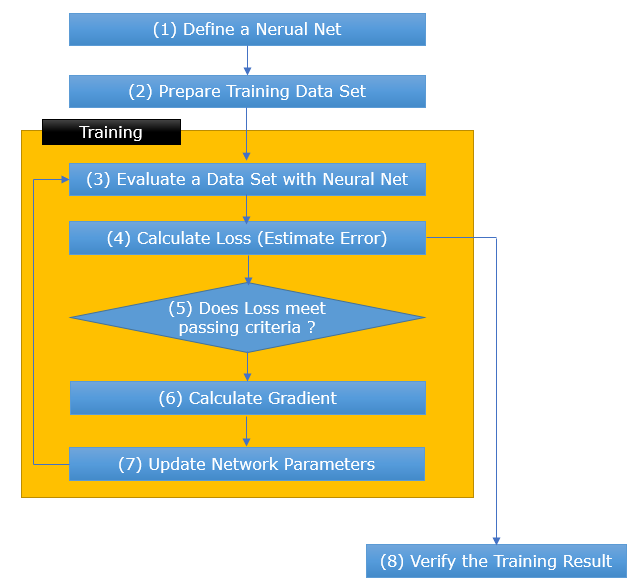

If you get interested in the Neural Network/Deep Learning type of machine learning to the point where you want to implement a neural network on your own, you should be familiar with the following flow. But don't try to memorize it. As with learning most new fields, rote memorization does not help much. Just read materials and watch related videos as much as possible, then take a quick look at this flow and see how clearly each step makes sense to you. As you repeat this practice (i.e., study and practice, then look at this flow, again and again), the flow will gradually become part of your natural thought process.

I myself am not an expert in this field and am still at the early stage of the learning curve. When I first started studying this field several months ago, this flow did not make clear sense to me. But after several months of study and practice (not full time, just as a hobby), I think I am gradually getting familiar with each of these steps. I often keep a YouTube video on this topic running while I am trying to fall asleep at night, just listening without watching. When I started, this didn't help me sleep, because I always had to get up and watch the video: the audio alone didn't make much sense to me. Now that I am more familiar with the process, the audio alone has started making sense. Without watching the slides or source code shown in the video, my brain associates the audio with the corresponding step in the flow. It helps a lot with my sleeping problem as well :-).

In this note, I will try to share my personal experience of how and what to study for each of the steps in the flow. I don't think my way is the only right way, and you may think it would take too long to learn this way. That may be true, but I hope this note is helpful for some people.

Study for Step 1 : Define a Neural Network

When I started studying this field (and even now, Nov 2020), developing application software using neural networks was not my goal. My main motivation is to understand how it works in as much detail as possible. I am especially attracted to this field because my educational background was in biology. Genetic engineering and neuroscience were my special interests back then. Even though I have been working in engineering for most of my career, my interest in neuroscience is still with me, now as a hobby. Naturally, my interest started with how each neuron is implemented in a neural network. As an ex-biologist, how a neuron works in a biological system is a pretty familiar concept to me, and I wanted to understand how that biological concept is implemented in software. The result of searching through a lot of documents, papers, and YouTube videos was several notes written in my own words and intuition.

If you had to build every neural network from only a bunch of perceptrons and matrices, you would not get very far, because it very soon gets too complicated to manage. Most neural network software packages (like PyTorch, TensorFlow, the MATLAB NN package, etc.) provide a simpler way of building/defining a complicated neural network. I picked PyTorch and the MATLAB ML package as my preferred tools and wrote notes from my own practice.
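To give a feel for what "building from perceptrons and matrices" means, here is a minimal sketch in plain Python of a tiny network computed by hand. The function names (`neuron`, `layer`), the sigmoid activation, and all weight values are assumptions made up for illustration, not taken from any particular package or from a trained model.

```python
import math

def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of the inputs plus a bias, then a sigmoid."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def layer(inputs, weight_rows, biases):
    """A layer is several neurons that all read the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]

# Hypothetical hand-picked weights (illustration only, not trained values).
hidden_w = [[0.5, -0.5], [-1.0, 1.0], [0.25, 0.75]]  # 3 hidden neurons, 2 inputs each
hidden_b = [0.0, 0.0, 0.0]
output_w = [[1.0, -1.0, 0.5]]                        # 1 output neuron, 3 inputs
output_b = [0.0]

x = [1.0, 2.0]                                # one input sample with 2 features
hidden = layer(x, hidden_w, hidden_b)         # forward pass through the hidden layer
output = layer(hidden, output_w, output_b)    # forward pass through the output layer
print(hidden, output)
```

Even for this 2-3-1 network, every weight list has to be sized and wired by hand, which is the bookkeeping that the packages mentioned above take over.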

Study for Step 6,7 : Calculate the Gradient and Update the network parameters

These steps are the key procedure for training the network. The fundamental algorithm for these steps is the Gradient Descent method. I think I am pretty familiar with the mathematical concept of Gradient Descent and wrote a note on it. However, understanding only the fundamental concept of Gradient Descent is not sufficient for professional applications. There are many variations of the algorithm, and most Deep Learning packages support a variety of them. PyTorch, for example, supports multiple algorithms through its `torch.optim` package, such as SGD, Adam, RMSprop, and Adagrad.
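As a minimal sketch of the basic idea behind these two steps, the plain Python below computes a gradient and then updates a single parameter, repeatedly. The function `gradient_descent`, the toy loss f(w) = (w - 3)^2, and the learning rate and step count are all assumptions chosen for illustration; a real network applies the same update to many parameters at once.

```python
def gradient_descent(grad, w0, lr=0.1, steps=100):
    """Repeat: compute the gradient at w, then move w a small step against it."""
    w = w0
    for _ in range(steps):
        g = grad(w)       # calculate the gradient
        w = w - lr * g    # update the parameter
    return w

# Toy loss f(w) = (w - 3)^2, whose gradient is 2 * (w - 3); the minimum is at w = 3.
w_final = gradient_descent(lambda w: 2.0 * (w - 3.0), w0=0.0)
print(w_final)
```

With these settings the distance to the minimum shrinks by a factor of 0.8 at every step, so `w_final` ends up very close to 3.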

What I need to learn further is clear answers to the following questions: i) Why is the original Gradient Descent algorithm not enough (i.e., why do we need to consider so many different variations of the algorithm)? ii) What are the exact differences among those algorithms? iii) How do we figure out which algorithm to use for a specific Deep Learning application that I build?
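As a partial illustration for question ii), the sketch below puts three update rules side by side in plain Python: plain gradient descent, gradient descent with momentum, and Adam. The function names, the toy loss f(w) = (w - 3)^2, and the hyperparameter values are assumptions for illustration (the Adam values are commonly used defaults). It only shows how the update formulas differ and does not say which one to choose for a real application.

```python
import math

def grad(w):
    """Gradient of the toy loss f(w) = (w - 3)^2."""
    return 2.0 * (w - 3.0)

def run_sgd(w, lr=0.1, steps=500):
    """Plain gradient descent: the step depends only on the current gradient."""
    for _ in range(steps):
        w -= lr * grad(w)
    return w

def run_momentum(w, lr=0.1, mu=0.9, steps=500):
    """Momentum: the step is a decaying sum of past gradients (a 'velocity')."""
    velocity = 0.0
    for _ in range(steps):
        velocity = mu * velocity - lr * grad(w)
        w += velocity
    return w

def run_adam(w, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    """Adam: running averages of the gradient (m) and squared gradient (v) scale each step."""
    m, v = 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        m_hat = m / (1 - beta1 ** t)   # bias correction for the early steps
        v_hat = v / (1 - beta2 ** t)
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return w

results = {
    "sgd": run_sgd(0.0),
    "momentum": run_momentum(0.0),
    "adam": run_adam(0.0),
}
print(results)
```

On this simple one-parameter loss all three rules approach the minimum at w = 3; the differences matter more on losses with many parameters and uneven curvature.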

Reference :

[1] Learning Rate Decay (C2W2L09)
[2] Adam Optimization Algorithm (C2W2L08)
[3] Adam Optimizer or Adaptive Moment Estimation Optimizer
[4] Stochastic Gradient Descent
