CUDA

CUDA stands for Compute Unified Device Architecture. It is a tool made by NVIDIA that lets programmers use NVIDIA graphics cards to do calculations. Graphics cards are normally used to process images and video, but with CUDA they can be used for general-purpose calculations, including those needed for machine learning.

More formally, CUDA is a parallel computing platform and application programming interface (API) model. In other words, it works like a translator. It takes the instructions written by programmers and translates them into a form that the graphics card can execute. This lets the graphics card perform many calculations at the same time, which can make programs run faster.

In CUDA, the instructions for the graphics card are written as small functions called kernels. A kernel is like a mini-program that is run many times at once on the graphics card. Many GPU threads execute it in parallel, and each thread typically works on a different piece of the data. This is how CUDA makes programs run faster: by doing many things at once.
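The example below makes the kernel idea concrete without needing a GPU. It is a pure-Python sketch that only *simulates* a CUDA kernel launch:

- The same small function runs once per thread.
- Each thread works out which array element it owns from its block index, the block size and its thread index. This is the same `blockIdx.x * blockDim.x + threadIdx.x` formula that real CUDA kernels use.

The names `vector_add_kernel` and `launch` are hypothetical. On a real GPU the threads run in parallel instead of in the sequential loop used here.

```python
from typing import Callable, List


def vector_add_kernel(block_idx: int, block_dim: int, thread_idx: int,
                      a: List[float], b: List[float], out: List[float]) -> None:
    """Body of one simulated thread: add a single pair of elements."""
    # Global index of this thread, as in CUDA C: blockIdx.x * blockDim.x + threadIdx.x
    i = block_idx * block_dim + thread_idx
    # The grid may contain more threads than elements, so guard the bounds.
    if i < len(out):
        out[i] = a[i] + b[i]


def launch(kernel: Callable[..., None], grid_dim: int, block_dim: int, *args) -> None:
    """Call `kernel` once for every (block, thread) pair, one after another.

    A real GPU would execute these invocations in parallel.
    """
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


if __name__ == "__main__":
    n = 10
    a = [float(x) for x in range(n)]
    b = [2.0 * x for x in range(n)]
    out = [0.0] * n
    threads_per_block = 4
    # Round up so every element gets a thread: (10 + 3) // 4 = 3 blocks of 4 threads.
    blocks = (n + threads_per_block - 1) // threads_per_block
    launch(vector_add_kernel, blocks, threads_per_block, a, b, out)
    print(out)  # [0.0, 3.0, 6.0, 9.0, 12.0, 15.0, 18.0, 21.0, 24.0, 27.0]
```

Two of the twelve simulated threads fall outside the array, and the bounds check simply makes them do nothing. Real CUDA kernels handle the same situation in the same way.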

This note is not about CUDA technology itself. My personal interest is simply to use the graphics card in my laptop through the CUDA framework from Python. So most of the content covers the software setup needed to make the graphics card work with Python in the CUDA framework.

Get CUDA Compatible Graphics Card

Before buying my new laptop, I wanted to make sure it had a CUDA-compatible graphics card. So the first step was to find out which laptop graphics cards are CUDA-compatible. In May 2023, I asked Bing Chat for a list of CUDA-compatible laptop graphics cards. It gave me a short list that led me to https://developer.nvidia.com/cuda-gpus, which provides the official information from NVIDIA.

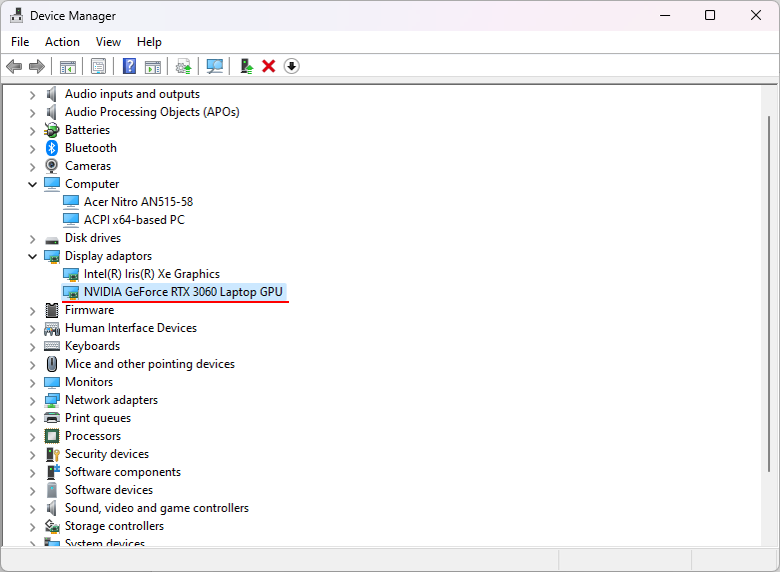

In the end, I bought a laptop with the graphics card shown below.

Having CUDA-compatible hardware does not mean you can use it right away. You also need a set of software components to make the hardware work. In short, there are two major software components, as follows:

Sounds simple? It only SOUNDS simple. I think most of the CUDA setup problems you are likely to run into are related to these two components.
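The NVIDIA display driver is needed in any case, and it installs the `nvidia-smi` command-line utility. A useful first sanity check is to ask the driver which GPUs it can see. The sketch below does this, assuming `nvidia-smi` is reachable on the PATH. The helper names `query_gpus` and `parse_gpu_csv` are hypothetical; the `--query-gpu` and `--format=csv,noheader` options are standard `nvidia-smi` flags.

```python
import shutil
import subprocess
from typing import List, Optional, Tuple


def parse_gpu_csv(text: str) -> List[Tuple[str, str]]:
    """Turn 'name, driver_version' CSV lines into (name, driver) tuples."""
    gpus = []
    for line in text.strip().splitlines():
        if "," not in line:
            continue  # skip blank or unexpected lines
        name, driver = [field.strip() for field in line.rsplit(",", 1)]
        gpus.append((name, driver))
    return gpus


def query_gpus() -> Optional[List[Tuple[str, str]]]:
    """Return the GPUs seen by the NVIDIA driver, or None if nvidia-smi is unavailable."""
    exe = shutil.which("nvidia-smi")
    if exe is None:
        return None  # driver missing, or nvidia-smi is not on PATH
    result = subprocess.run(
        [exe, "--query-gpu=name,driver_version", "--format=csv,noheader"],
        capture_output=True, text=True, check=False,
    )
    if result.returncode != 0:
        return None
    return parse_gpu_csv(result.stdout)


if __name__ == "__main__":
    gpus = query_gpus()
    if gpus is None:
        print("nvidia-smi not found or failed: check the NVIDIA driver installation.")
    else:
        for name, driver in gpus:
            print(f"{name} (driver {driver})")
```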

Once this software is installed on your PC, there are also a few things you need to make sure of, as follows:

The first item is easy: just download the necessary package and install it. Most problems are related to the 2nd or 3rd items. Ideally, installing the software should take care of the 2nd and 3rd items automatically, but in many cases it does not seem to.

The simplest way to check whether the compilers are installed and their paths are properly set is as follows:

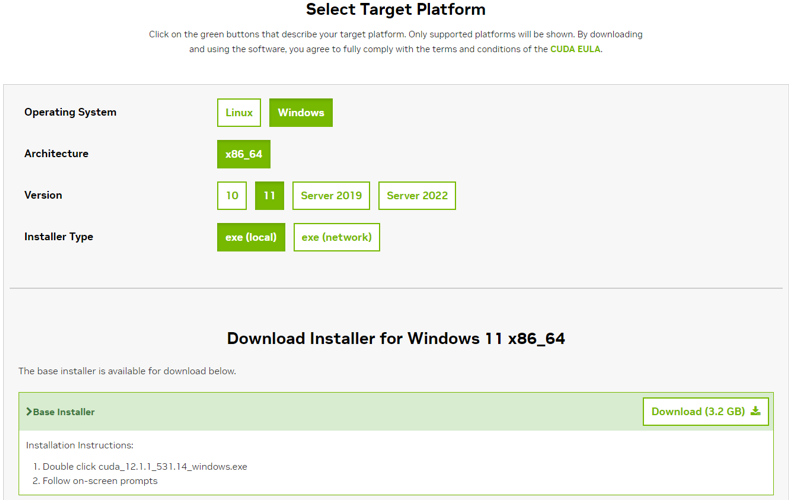
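The same check can be scripted from Python. This sketch assumes the compilers in question are NVIDIA's `nvcc` and, on Windows, Microsoft's `cl`, the MSVC host compiler that `nvcc` uses there. It works in two steps:

- `shutil.which` reports whether each compiler is reachable through the PATH.
- The CUDA release is read from the `release X.Y` part of the `nvcc --version` output.

The function names are hypothetical.

```python
import re
import shutil
import subprocess
from typing import Dict, Iterable, Optional


def parse_nvcc_release(version_text: str) -> Optional[str]:
    """Extract the toolkit release (e.g. '12.1') from `nvcc --version` output."""
    match = re.search(r"release\s+(\d+\.\d+)", version_text)
    return match.group(1) if match else None


def check_compilers(names: Iterable[str] = ("nvcc", "cl")) -> Dict[str, Optional[str]]:
    """Map each compiler name to its full path on PATH, or None if it is not found."""
    return {name: shutil.which(name) for name in names}


def nvcc_release() -> Optional[str]:
    """Run `nvcc --version` and return the CUDA release, or None if nvcc is missing."""
    exe = shutil.which("nvcc")
    if exe is None:
        return None
    result = subprocess.run([exe, "--version"], capture_output=True, text=True, check=False)
    return parse_nvcc_release(result.stdout)


if __name__ == "__main__":
    for name, path in check_compilers().items():
        print(f"{name}: {path or 'NOT found on PATH'}")
    print("CUDA release reported by nvcc:", nvcc_release())
```

`cl` is usually on the PATH only inside a Visual Studio Developer Command Prompt, so a missing `cl` in an ordinary shell is not necessarily a problem by itself.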

Download the CUDA Toolkit from https://developer.nvidia.com/cuda-downloads

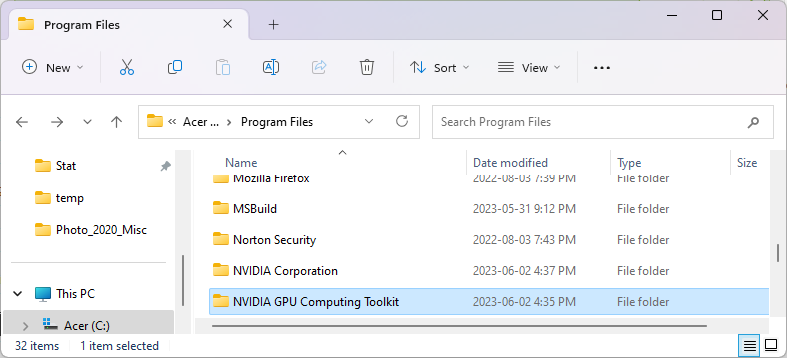

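By default, the compiler (nvcc) for toolkit version 12.1 is installed in the following folder, which must be included in the PATH environment variable:

C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v12.1\bin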

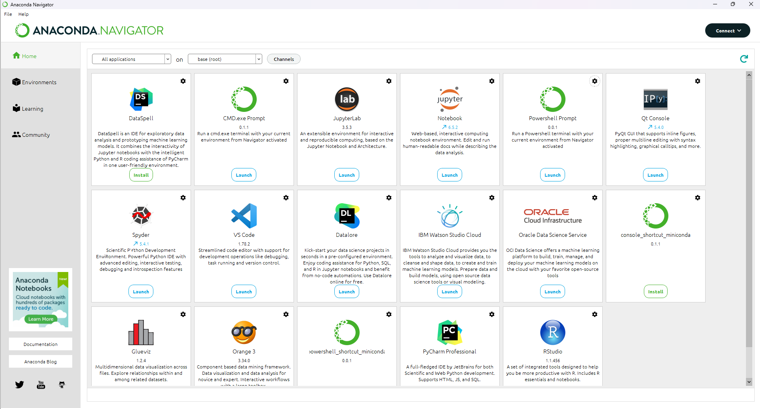
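To confirm from Python that the toolkit's bin folder listed above really is on the PATH, the sketch below compares each PATH entry with that folder. It compares paths case-insensitively and ignores quotes, slash style and trailing separators, as is appropriate for Windows paths.

Assumptions: the CUDA 12.1 default folder is used, and `dir_on_path` is a hypothetical helper. The installer is also commonly reported to set a `CUDA_PATH` variable; that is an assumption to verify on your own system.

```python
import os
from typing import Optional

# Default CUDA 12.1 bin folder on Windows, taken from the path listed in this note.
CUDA_BIN = r"C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v12.1\bin"


def _normalize(path: str) -> str:
    """Normalize a Windows path for comparison: no quotes, '/' separators, no trailing slash, lowercase."""
    return path.strip().strip('"').replace("\\", "/").rstrip("/").lower()


def dir_on_path(directory: str, path_value: Optional[str] = None, sep: str = os.pathsep) -> bool:
    """Return True if `directory` is one of the entries of a PATH-style string."""
    if path_value is None:
        path_value = os.environ.get("PATH", "")
    target = _normalize(directory)
    return any(_normalize(entry) == target for entry in path_value.split(sep) if entry.strip())


if __name__ == "__main__":
    print("CUDA bin on PATH:", dir_on_path(CUDA_BIN))
    # Assumption: the Windows installer usually sets CUDA_PATH; None means it is not set here.
    print("CUDA_PATH =", os.environ.get("CUDA_PATH"))
```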

Download and install Anaconda (a Python distribution) from https://www.anaconda.com/download
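Once Python is installed, a quick end-to-end test is to ask a GPU-enabled library whether it can see the card. The note does not say which library is used, so this sketch assumes PyTorch. It only calls `torch.cuda.is_available()`, `torch.version.cuda`, `torch.cuda.device_count()` and `torch.cuda.get_device_name()`. The function name `cuda_report` is hypothetical. If PyTorch is not installed, the function simply reports that.

```python
from typing import Any, Dict


def cuda_report() -> Dict[str, Any]:
    """Summarize CUDA availability as seen by PyTorch, if PyTorch is installed."""
    try:
        import torch  # assumption: PyTorch with CUDA support is the library in use
    except ImportError:
        return {"torch_installed": False, "available": False}
    report: Dict[str, Any] = {
        "torch_installed": True,
        "available": torch.cuda.is_available(),
        "torch_cuda_version": torch.version.cuda,  # None for CPU-only builds
    }
    if report["available"]:
        report["device_count"] = torch.cuda.device_count()
        report["device_name"] = torch.cuda.get_device_name(0)
    return report


if __name__ == "__main__":
    for key, value in cuda_report().items():
        print(f"{key}: {value}")
```

PyTorch binary packages bundle their own CUDA runtime. As a result, the version reported here can differ from the toolkit release that `nvcc` reports.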
